Handling the captcha comes down to configuring the parameters of the captcha_resolve command. To bypass the access error, we will use proxy rotation and repeat mode for the walk command.
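The idea of proxy rotation combined with repeating a failed request can be illustrated outside the digger configuration. The following is only a minimal Python sketch, not Diggernaut's implementation: `AccessError`, `fetch_with_rotation`, and the `fetch(url, proxy)` callable are hypothetical names introduced for this example.

```python
import itertools


class AccessError(Exception):
    """Hypothetical error raised by the fetch callable when access is refused."""


def fetch_with_rotation(fetch, url, proxies, max_attempts=5):
    """Call fetch(url, proxy), moving to the next proxy after each AccessError."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    pool = itertools.cycle(proxies)  # endless round-robin over the proxy list
    last_error = None
    for _ in range(max_attempts):
        proxy = next(pool)
        try:
            return fetch(url, proxy)
        except AccessError as exc:
            last_error = exc  # remember the failure, then repeat with another proxy
    raise RuntimeError(f"gave up after {max_attempts} attempts") from last_error
```

The request is repeated with a different proxy each time, and the loop stops either on the first successful response or once the attempt limit is reached.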

Generalized webscraper code

If you want to run the compiled digger on your computer, you will need to use one of the integrated services to solve the captcha: Anti-captcha or 2Captcha. You will also need your own account with one of these services. In addition, you will have to change the scraper code a little.

Generalized webscraper free

There is one more thing we want to tell you about. Amazon can temporarily block the IP address from which automated requests come. For example, Amazon may show a captcha or an error page. Therefore, for the scraper to work successfully, we need to think about how it will catch and bypass these cases. We will bypass the captcha with our internal captcha solution. Since this mechanism works as a microservice, it is available only when running the digger in the cloud, but it is free for all users of the Diggernaut platform.
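Catching the captcha and error pages mentioned above could look roughly like the Python sketch below. It is an illustration only: the marker strings are placeholders chosen for this example, not Amazon's actual page contents, and `classify_page` is a hypothetical helper.

```python
# Placeholder markers (assumptions for illustration); real pages must be inspected
# to find text that reliably identifies a captcha or an error page.
CAPTCHA_MARKERS = ("captcha",)
ERROR_MARKERS = ("something went wrong",)


def classify_page(status_code, html):
    """Return 'captcha', 'error', or 'ok' for a fetched page."""
    text = html.lower()
    if any(marker in text for marker in CAPTCHA_MARKERS):
        return "captcha"
    if status_code >= 400 or any(marker in text for marker in ERROR_MARKERS):
        return "error"
    return "ok"
```

A scraper can then branch on the result: send a `captcha` page to the solver, repeat the request after an `error`, and parse the page only when it is `ok`.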

If you do not need targeting by country, you can omit any settings in the proxy section. In this case, mixed proxies from our pool will be used, provided, of course, that you run the web scraper in the cloud. To reduce the chance of blocking, we will also use pauses between requests.
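As a rough illustration of pausing between requests, here is a small Python sketch. The function name `paced` and the default pause range are assumptions made for this example; the `sleep` and `rng` parameters exist only so the pause can be replaced in tests.

```python
import random
import time


def paced(items, min_pause=1.0, max_pause=3.0, sleep=time.sleep, rng=random.uniform):
    """Yield items one by one, pausing a random interval between consecutive items."""
    for index, item in enumerate(items):
        if index > 0:
            # Randomized pause so that requests do not arrive at a fixed rhythm.
            sleep(rng(min_pause, max_pause))
        yield item
```

Iterating with `for url in paced(urls): ...` then waits between one and three seconds before every request except the first.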

Generalized webscraper how to

If you would rather not spend your time on this, you can hire our developers.

Important points before starting development

Amazon renders the goods depending on the geo-factor, which is determined by the client’s IP address. Therefore, if you are interested in information for the US market, you should use a proxy from the USA. On the Diggernaut platform, you can specify geo-targeting to a specific country using the geo option. However, it only works with paid subscription plans. With a free account, you can use your own proxy server. How to use them is described in our documentation, linked above.
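Conceptually, geo-targeting narrows the proxy pool to one country, and leaving it out means any proxy may be used. The Python sketch below shows that idea only. It is not how the Diggernaut geo option is implemented, and the pool structure (`url` and `country` keys) and the `pick_proxy` helper are hypothetical.

```python
import random


def pick_proxy(pool, geo=None, rng=random):
    """Pick a proxy URL from pool, restricted to country code geo when it is given.

    pool is a list of dicts with 'url' and 'country' keys (an assumed structure).
    """
    candidates = [p for p in pool if geo is None or p["country"] == geo]
    if not candidates:
        raise LookupError(f"no proxy available for country {geo!r}")
    return rng.choice(candidates)["url"]
```

Calling `pick_proxy(pool, geo="US")` returns only US proxies, while `pick_proxy(pool)` draws from the mixed pool.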

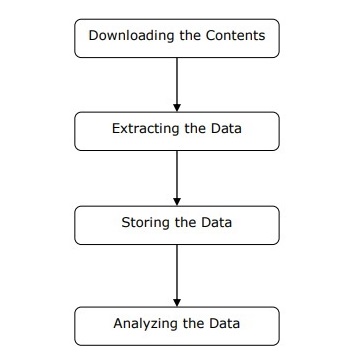

Mikhail Sisin, co-founder of the cloud-based web scraping and data extraction platform Diggernaut. Over 10 years of experience in data extraction, ETL, AI, and ML.

Today we are going to build a web scraper for Amazon. The tool will be designed to collect basic information about products from a specific category. If you wish, you can expand the dataset to be collected on your own.
